Can we expand our moral circle towards an empathic civilization?

June 18, 2012

The Royal Society for the encouragement of Arts, Manufactures and Commerce (or RSA for short) hosts numerous speakers on ideas and actions for a 21st century enlightenment. They upload many of these talks to their YouTube channel, to which I am a recent subscriber. I particularly enjoy their RSA Animate series, in which an artist draws a beautiful whiteboard sketch of the talk as it is being presented (usually filled with lots of visual puns and extra commentary). As a way to introduce our readers to RSA Animate, I thought I would share the talk entitled “The Empathic Civilisation” by Jeremy Rifkin:

Rifkin highlights three aspects of empathy: (1) the activity of mirror neurons, (2) the soft-wiring of humans for cooperative and empathic traits, and (3) the expansion of the empathic circle from blood ties to nation-states to (hopefully) the whole biosphere. I chose the word “circle” for the third point deliberately, because it reminds me of Peter Singer's The Expanding Circle (if you want more videos, there is also an interesting interview about the expanding circle). In 1981, Singer postulated that the extended range of cooperation and altruism is driven by an expanding moral (or empathic) circle. He sees no reason for this drive to stop before the moral circle includes all humans or even the biosphere, reaching past ethnic, religious, and racial lines.

One of my few hammers is evolutionary game theory, and points (2) and (3) become obvious nails. The soft-wiring towards empathy can be looked at in the framework of objective and subjective rationality that Marcel and I are developing; I might address this point in a later post. For now, I want to focus on this idea of the expanding circle since these ideas relate closely to our work on the evolution of ethnocentrism.

Moral circles do not expand in simple tag-based models

If I recall correctly, it was Laksh Puri who first brought Singer's ideas to my attention. Laksh thought that our computational models for the evolution of ethnocentrism could be adapted to study the evolutionary basis of morality as proposed by Singer. In 2008, I modified some of our code to test this idea. In particular, I took the Hammond and Axelrod (2006) model of the evolution of ethnocentrism and built in an idea of super- (as I called them) or hierarchical (as Tom and Laksh called them) tags.

In the standard model, agents are endowed with an arbitrary integer which acts as a tag, and two strategies: one for the in-group (same tag) and one for the out-group (different tag). I was working with a 6-tag population: we can think of these tags as the integers 0, 1, 2, 3, 4, and 5. To expand a circle means to consider more people as part of your in-group; to me this sounded like coarse-grained tag perception. To implement this, I did the easiest thing possible and introduced an extra coarseness or mod parameter (1, 2, 3, or 6) which corresponded to the ability of agents to distinguish tags. In particular, a mod-6 agent could distinguish all tags, and thus if he was a tag-0 agent, he would know that tag-1, tag-2, tag-3, tag-4, and tag-5 agents were all different from him. A mod-3 agent, on the other hand, would test for tag equality using modular arithmetic with base 3. Thus a tag-0 mod-3 agent would think that both tag-0 and tag-3 agents are part of his in-group (since $3 \equiv 0 \pmod{3}$); similarly for mod-2, where a tag-0 agent would see tags 0, 2, and 4 as his in-group. For mod-1, everyone would look like the in-group. Therefore, a mod-1 ethnocentric is equivalent to a humanitarian, and a mod-1 traitor is equivalent to a selfish agent, and each was counted as such in the simulation results.
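A minimal Python sketch of the coarse-grained tag test described above. It is not the original 2008 code: the `Agent` class, its fields (`tag`, `mod`, `coop_in`, `coop_out`), and the helpers `perceives_in_group` and `strategy_label` are hypothetical names, and the sketch assumes tags run from 0 to 5 and that `mod` is one of 1, 2, 3, or 6.

```python
from dataclasses import dataclass

NUM_TAGS = 6          # tags are the integers 0..5
MODS = (1, 2, 3, 6)   # allowed coarseness levels; each divides NUM_TAGS


@dataclass(frozen=True)
class Agent:
    tag: int          # own tag, 0..NUM_TAGS-1
    mod: int          # perceptual coarseness, one of MODS (hypothetical field)
    coop_in: bool     # cooperate with agents perceived as in-group?
    coop_out: bool    # cooperate with agents perceived as out-group?


def perceives_in_group(observer: Agent, other_tag: int) -> bool:
    """Test tag equality modulo the observer's coarseness."""
    return observer.tag % observer.mod == other_tag % observer.mod


def strategy_label(agent: Agent) -> str:
    """Classify an agent into one of the four strategies.

    A mod-1 agent sees everyone as in-group, so only its in-group move is
    ever used: a mod-1 ethnocentric behaves as a humanitarian and a mod-1
    traitor behaves as selfish.
    """
    effective_out = agent.coop_in if agent.mod == 1 else agent.coop_out
    return {
        (True, True): "humanitarian",
        (True, False): "ethnocentric",
        (False, True): "traitor",
        (False, False): "selfish",
    }[(agent.coop_in, effective_out)]


# Which tags does a tag-0 agent lump into its in-group at each coarseness?
for m in MODS:
    agent = Agent(tag=0, mod=m, coop_in=True, coop_out=False)
    print(m, [t for t in range(NUM_TAGS) if perceives_in_group(agent, t)])
```

The loop shows the circle shrinking as `mod` grows: a tag-0 agent sees every tag as in-group at mod-1, tags 0, 2, and 4 at mod-2, tags 0 and 3 at mod-3, and only tag 0 at mod-6.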

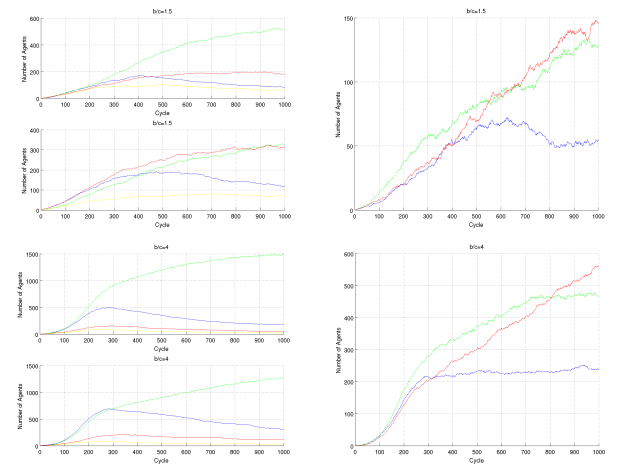

As the section title suggests, the simulation results did not support the idea of an expanding circle. The more coarse-grained agents did not fare well compared to the mod-6 agents with their ability to make fine distinctions. The interaction was the prisoner's dilemma, and I ran 30 simulations under each of two conditions (with and without supertags) for each of the $\frac{b}{c}$ ratios 1.5, 2, 2.5, 3, and 4. I present here the results for 1.5 and 4. Unfortunately, I did not bother to plot the error bars like I usually do, but they were relatively tight.
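The post does not spell out how the prisoner's dilemma is parameterized by $\frac{b}{c}$. A common choice, assumed here, is the donation game: a cooperator pays a cost $c$ and its partner gains a benefit $b$, which is a prisoner's dilemma whenever $b > c > 0$. The sketch below plays one such interaction between two agents with coarse-grained tag perception; `Agent`, `will_cooperate`, `interact`, and the `payoff` field are hypothetical names, not the original simulation code.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class Agent:
    tag: int
    mod: int             # coarseness of tag perception (1, 2, 3, or 6)
    coop_in: bool        # move toward perceived in-group
    coop_out: bool       # move toward perceived out-group
    payoff: float = 0.0  # accumulated payoff (hypothetical bookkeeping field)


def will_cooperate(actor: Agent, partner: Agent) -> bool:
    """The actor compares tags modulo its own coarseness, then picks a move."""
    same_group = actor.tag % actor.mod == partner.tag % actor.mod
    return actor.coop_in if same_group else actor.coop_out


def interact(a: Agent, b: Agent, benefit: float, cost: float) -> Tuple[bool, bool]:
    """One donation-game round; a prisoner's dilemma when benefit > cost > 0.

    Each cooperator pays `cost` and its partner receives `benefit`.
    Returns the two moves (True = cooperate).
    """
    a_coops = will_cooperate(a, b)
    b_coops = will_cooperate(b, a)
    if a_coops:
        a.payoff -= cost
        b.payoff += benefit
    if b_coops:
        b.payoff -= cost
        a.payoff += benefit
    return a_coops, b_coops


# b/c = 1.5: a mod-6 ethnocentric meets a tag-3 humanitarian.
ethno = Agent(tag=0, mod=6, coop_in=True, coop_out=False)
human = Agent(tag=3, mod=6, coop_in=True, coop_out=True)
print(interact(ethno, human, benefit=1.5, cost=1.0), ethno.payoff, human.payoff)
# (False, True) 1.5 -1.0
```

Here the mod-6 ethnocentric treats the tag-3 humanitarian as out-group, defects, and collects the benefit for free; a mod-3 ethnocentric would lump tag 3 into its in-group and cooperate instead.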

On the left we have the proportion of strategies with humanitarians in blue, ethnocentrics in green, traitors in yellow, and selfish in red. From top to bottom, we have no supertags and b/c = 1.5; supertags, b/c = 1.5; no supertags, b/c = 4.0; and, supertags, b/c = 4.0.

On the right we have the breakdown by mod of the ethnocentric agents in the supertag cases. In red is mod-6, green is mod-3, and blue is the most coarse-grained, mod-2. From top to bottom: supertags with b/c = 1.5; and supertags with b/c = 4.0.

All results are averages from 30 independent runs.

In both the low and high ratio conditions, most of the ethnocentric agents tend towards being the most discriminating possible: mod-6 (the red lines in the right figures). For $b/c = 1.5$, it is also clear that the population is not nearly as effective at suppressing selfish agents. In the $b/c = 4$ case, it seems that supertags sustain a higher level of humanitarian agents, but it is not clear to me that this trend would remain if I ran the simulations longer. The real test is to see whether there is more cooperation.
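The right-hand panels report how the ethnocentric agents are split across coarseness levels. A hypothetical sketch of that tally, assuming each agent has already been reduced to a (strategy, mod) pair and that mod-1 ethnocentrics have been relabeled as humanitarians; the function name and data layout are illustrative only:

```python
from collections import Counter
from typing import Dict, Iterable, Tuple


def ethnocentric_mod_breakdown(population: Iterable[Tuple[str, int]]) -> Dict[int, float]:
    """Share of ethnocentric agents at each coarseness level 2, 3, and 6.

    `population` holds one (strategy_label, mod) pair per agent. Mod-1
    ethnocentrics are assumed to be relabeled as humanitarians already,
    so any that slip through are skipped here.
    """
    counts = Counter(mod for label, mod in population
                     if label == "ethnocentric" and mod > 1)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {mod: counts[mod] / total for mod in (2, 3, 6)}


# A toy population, not simulation data.
toy = [("ethnocentric", 6)] * 3 + [("ethnocentric", 3), ("humanitarian", 1)]
print(ethnocentric_mod_breakdown(toy))  # {2: 0.0, 3: 0.25, 6: 0.75}
```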

Plots of the proportion of cooperative interactions. On the left is the b/c = 1.5 case and on the right is 4.0. For both plots, the blue line is the condition with supertags, and the black is without. Results are averages from 30 runs. Error bars are omitted, but in both conditions the black line is higher than the blue by a statistically significant margin.
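The post does not say how cooperative interactions were counted or which significance test was used. As an assumption, the sketch below records one boolean per cooperate/defect decision, averages per-run proportions over independent runs with a standard error, and compares two conditions with Welch's t statistic (no p-value, to stay within the standard library). All function names are hypothetical.

```python
import math
from statistics import mean, stdev, variance
from typing import Sequence, Tuple


def cooperation_proportion(decisions: Sequence[bool]) -> float:
    """Fraction of cooperate/defect decisions in one run that were 'cooperate'."""
    if not decisions:
        raise ValueError("no decisions recorded")
    return sum(decisions) / len(decisions)


def mean_and_sem(run_values: Sequence[float]) -> Tuple[float, float]:
    """Mean across independent runs and its standard error (needs >= 2 runs)."""
    return mean(run_values), stdev(run_values) / math.sqrt(len(run_values))


def welch_t(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Welch's t statistic for the difference in means of two conditions."""
    se = math.sqrt(variance(xs) / len(xs) + variance(ys) / len(ys))
    if se == 0:
        raise ValueError("both conditions have zero variance")
    return (mean(xs) - mean(ys)) / se


# Toy per-run proportions for illustration only, not results from the post.
runs_without = [0.62, 0.65, 0.60]
runs_with = [0.55, 0.58, 0.54]
print(mean_and_sem(runs_without), welch_t(runs_without, runs_with))
```

With 30 runs per condition, `mean_and_sem` would give each plotted point and its error bar, and a large positive `welch_t` for (without, with) would correspond to the black line sitting above the blue.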

Here, in both conditions there are fewer cooperative interactions when supertags are allowed. The marginal increases in coarse-graining and in the number of humanitarians do not result in a more cooperative world. I have observed the same trade-off between fairness (more humanitarians) and cooperative interactions when looking at cognitive cost (Kaznatcheev, 2010). For this sort of simulation, this might very well be a general phenomenon: increases in fairness produce decreases in cooperation. My central point is that we do not see a strong expansion of our in-group circle. Further, even if we do see such an expansion, it might come at the cost of cooperation. It seems that evolution favors the most fine-grained perception of tags; there is no strong drive to expand our circle towards an empathic civilization.

References

Hammond, R., & Axelrod, R. (2006). The evolution of ethnocentrism. Journal of Conflict Resolution, 50(6), 926-936. doi:10.1177/0022002706293470

Kaznatcheev, A. (2010). The cognitive cost of ethnocentrism. In S. Ohlsson & R. Catrambone (Eds.), Proceedings of the 32nd Annual Conference of the Cognitive Science Society.