June 13, 2012

by Artem Kaznatcheev

Ethnocentrism is the tendency to favor one’s own group at the expense of others; a bias towards those similar to us. Many social scientists believe that ethnocentrism derives from cultural learning and depends on considerable social and cognitive abilities (Hewstone, Rubin, & Willis, 2002). However, the only fundamental requirement for implementing ethnocentrism is categorical perception. This minimal cognition already merits a rich analysis (Beer, 2003) but is only one step above always cooperating or defecting. Thus, considering strategies that can discriminate in-groups and out-groups is one of the first steps in following the biogenic approach (Lyon, 2006) to game theoretic cognition. In other words, by studying ethnocentrism from an evolutionary game theory perspective, we are trying to follow the bottom-up approach to rationality. Do you know other uses of evolutionary game theory in the cognitive sciences?

The model I am most familiar with for looking at ethnocentrism (in biology circles, usually called the green-beard effect) is Hammond & Axelrod's (2006) agent-based model. I present an outline of the model (with my slight modifications) and some basic results.

Model

The world is a square toroidal lattice. Each cell has four neighbors: east, west, north, south. A cell can be inhabited or uninhabited by an agent. The agent (say Alice) is defined by 3 traits: the cell she inhabits; her strategy; and her tag. The tag is an arbitrary quality, and Alice can only perceive if she has the same tag as Bob (the agent she is interacting with) or that their tags differ. When I present this, I usually say that Alice thinks she is a circle and perceives others with the same tag as circles, but those with a different tag as squares. This allows her to have 4 strategies:

The blue Alice cooperates with everybody, regardless of tag; she is a humanitarian. The green Alice cooperates with those of the same tag, but defects from those with a different tag; she is ethnocentric. The other two strategies follow a similar pattern: the traitorous (yellow) Alice cooperates only with those of a different tag, and the selfish (red) Alice defects from everybody. The strategies are deterministic, but we saw earlier that a mixed-strategy approach doesn’t change much.
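Since a strategy is just a pair of responses (one for partners with the same tag, one for partners with a different tag), the four strategies can be written down in a few lines. The following is a minimal Python sketch; the names `Strategy` and `action` are made up for illustration and are not taken from the H&A code.

```python
from enum import Enum

COOPERATE, DEFECT = "C", "D"


class Strategy(Enum):
    # value = (action toward same tag, action toward different tag)
    HUMANITARIAN = (COOPERATE, COOPERATE)  # blue
    ETHNOCENTRIC = (COOPERATE, DEFECT)     # green
    TRAITOROUS = (DEFECT, COOPERATE)       # yellow
    SELFISH = (DEFECT, DEFECT)             # red


def action(strategy, own_tag, other_tag):
    """Return the move an agent makes; it can only see whether the tags match."""
    in_group, out_group = strategy.value
    return in_group if own_tag == other_tag else out_group
```

Note that `action` never looks at the tag values themselves, only at whether they are equal, which is all the categorical perception the model requires.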

The simulations follow 3 stages (as summarized in the picture below):

- Interaction – agents in adjacent cells play the game with each other (usually a prisoner’s dilemma), choosing to cooperate or defect in each pair-wise interaction. The payoffs of the games are added to each agent’s base probability to reproduce (ptr) to arrive at that agent’s actual probability to reproduce.

- Reproduction – each agent rolls a die in accordance with their probability to reproduce. If they succeed, they produce an offspring, which is placed in a list of children-to-be-placed.

- Death and Placement – each agent on the lattice has a constant probability of dying and vacating their cell. The children-to-be-placed list is randomly permuted and we try to place each child in a cell adjacent to (or in place of) their parent if one is empty. If no empty cell is found, then the child dies.
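The three stages above can be sketched as one function call per evolutionary cycle. This is a rough Python illustration, not the code behind the results: the `Agent` fields, the `cycle` and `neighbours` names, and the dictionary representation of the lattice are all assumptions. It also assumes the prisoner’s dilemma takes the donation form, where cooperating costs the donor `c` and gives the recipient `b`. Only the three listed stages are covered.

```python
import random
from dataclasses import dataclass


@dataclass
class Agent:
    tag: int
    in_group: bool    # cooperate with same-tag neighbours?
    out_group: bool   # cooperate with different-tag neighbours?
    ptr: float = 0.0  # probability to reproduce in the current cycle


def neighbours(x, y, size):
    """East, west, north and south cells on a size-by-size torus."""
    return [((x + 1) % size, y), ((x - 1) % size, y),
            (x, (y + 1) % size), (x, (y - 1) % size)]


def cycle(world, size, rng, base_ptr=0.1, death=0.1, b=0.025, c=0.01):
    """Run one cycle in place; world maps inhabited (x, y) cells to Agents."""
    # 1. Interaction: every agent decides whether to help each neighbour.
    for agent in world.values():
        agent.ptr = base_ptr
    for (x, y), agent in world.items():
        for pos in neighbours(x, y, size):
            other = world.get(pos)
            if other is None:
                continue
            same = agent.tag == other.tag
            if agent.in_group if same else agent.out_group:
                agent.ptr -= c   # assumed donation game: donor pays c
                other.ptr += b   # and the recipient gains b

    # 2. Reproduction: successful agents add a clone to the waiting list.
    children = []
    for pos, agent in world.items():
        if rng.random() < agent.ptr:
            clone = Agent(agent.tag, agent.in_group, agent.out_group)
            children.append((pos, clone))

    # 3. Death and placement.
    for pos in [p for p in world if rng.random() < death]:
        del world[pos]
    rng.shuffle(children)
    for (x, y), child in children:
        empty = [p for p in [(x, y)] + neighbours(x, y, size)
                 if p not in world]
        if empty:  # otherwise the child dies
            world[rng.choice(empty)] = child
    return world
```

A run would seed `world` with a few agents of each strategy-tag combination and call `cycle(world, size, random.Random(seed))` repeatedly. A lattice with `size` of at least 3 is assumed, so that the four neighbours of a cell are distinct.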

The usual tracked parameters are the distribution of strategies (how many agents follow each strategy) and the proportion of cooperative interactions (the fraction of interactions where both parties chose to cooperate). The world starts with a few agents of each strategy-tag combination and fills up over time.

Results

The early results on the evolution of ethnocentrism are summarized in the following plot.

Number of agents grouped by strategy versus evolutionary cycle. Humanitarians are blue, ethnocentrics are green, traitorous are yellow, and selfish are red. The results are an average of 30 runs of the H&A model (default ptr = 0.1; death = 0.1; b = 0.025; c = 0.01) with line thickness representing the standard error. The boxes highlight the nature of early results on the H&A model.

Hammond and Axelrod (2006) showed that, after a transient period, ethnocentric agents dominate the population; humanitarians are the second most common, and traitorous and selfish agents are both extremely uncommon. Shultz, Hartshorn, and Hammond (2008) examined the transient period to uncover evidence for early competition between ethnocentric and humanitarian strategies. Shultz, Hartshorn, and Kaznatcheev (2009) focused on explaining the mechanism behind ethnocentric dominance over humanitarians, and observed the co-occurrence of world saturation and humanitarian decline. Kaznatcheev and Shultz (2011) concluded that it is the spatial aspect of the model that creates cooperation; being able to discriminate tags helps maintain cooperation and extend the range of parameters under which it can occur.

As you might have noticed from the simple DFAs drawn in the strategies figure, ethnocentric and traitorous agents are more complicated than humanitarian or selfish ones; they are more cognitively complex. Kaznatcheev (2010a) showed that ethnocentrism is not robust to increases in the cost of cognition. Thus, in humans (or simpler organisms) the mechanism allowing discrimination has to have been in place already (and not co-evolved) or be very inexpensive. Kaznatcheev (2010a) also observed that ethnocentrics maintain higher levels of cooperation than humanitarians. Thus, although ethnocentrism seems unfair due to its discriminatory nature, it is not clear that it produces a less friendly world.

The above examples dealt with the prisoner’s dilemma (PD), which is a typical model of a competitive environment. In the PD, cooperation is irrational, so ethnocentrism allowed the agents to cooperate irrationally (thus moving over to the better social payoff), while still treating those of a different culture rationally and defecting from them.

Unfortunately, Kaznatcheev (2010b) demonstrated that ethnocentric behavior is robust across a variety of games, even when out-group hostility is classically irrational (the harmony game). In the H&A model, ethnocentrism is a two-edged sword: it can cause unexpected cooperative behavior, but also irrational hostility.
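To make "rational" and "irrational" concrete, the short Python sketch below checks which move strictly dominates in each game. It assumes the PD in the model has the donation form (cooperating costs the donor c and gives the recipient b) and uses the default b = 0.025 and c = 0.01 quoted earlier. The harmony-game numbers are hypothetical, chosen only so that cooperation dominates, and the function names are illustrative.

```python
def donation_payoffs(b, c):
    """Payoff to the row player, indexed as payoffs[my_move][their_move]."""
    return {"C": {"C": b - c, "D": -c},
            "D": {"C": b, "D": 0.0}}


def dominant_move(payoffs):
    """Return the move that is strictly better against both replies, or None."""
    for mine, alternative in (("C", "D"), ("D", "C")):
        if all(payoffs[mine][t] > payoffs[alternative][t] for t in "CD"):
            return mine
    return None


# Prisoner's dilemma with the default benefit and cost (assumed donation form).
pd = donation_payoffs(b=0.025, c=0.01)

# Hypothetical harmony game: cooperating is better whatever the partner does.
harmony = {"C": {"C": 3, "D": 1},
           "D": {"C": 2, "D": 0}}

print(dominant_move(pd), dominant_move(harmony))
```

In this PD, defecting is the dominant move even though mutual cooperation (b - c) pays more than mutual defection (0). In the harmony game cooperating dominates, so an ethnocentric agent that defects from the out-group is only hurting itself.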

References

Beer, R. D. (2003). The dynamics of active categorical perception in an evolved model agent. Adaptive Behavior, 11, 209-243.

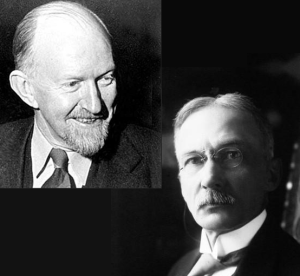

Hammond, R., & Axelrod, R. (2006). The evolution of ethnocentrism. Journal of Conflict Resolution, 50(6), 926-936. DOI: 10.1177/0022002706293470

Hewstone, M., Rubin, M., & Willis, H. (2002). Intergroup bias. Annual Review of Psychology, 53, 575-604.

Kaznatcheev, A. (2010a). The cognitive cost of ethnocentrism. In S. Ohlsson & R. Catrambone (Eds.), Proceedings of the 32nd annual conference of the cognitive science society.

Kaznatcheev, A. (2010b). Robustness of ethnocentrism to changes in inter-personal interactions. Complex Adaptive Systems – AAAI Fall Symposium.

Kaznatcheev, A., & Shultz, T. R. (2011). Ethnocentrism maintains cooperation, but keeping one’s children close fuels it. In L. Carlson, C. Hoelscher, & T. F. Shipley (Eds.), Proceedings of the 33rd annual conference of the cognitive science society.

Lyon, P. (2006). The biogenic approach to cognition. Cognitive Processing, 7, 11-29.

Shultz, T. R., Hartshorn, M., & Hammond, R. A. (2008). Stages in the evolution of ethnocentrism. In B. Love, K. McRae, & V. Sloutsky (Eds.), Proceedings of the 30th annual conference of the cognitive science society.

Shultz, T. R., Hartshorn, M., & Kaznatcheev, A. (2009). Why is ethnocentrism more common than humanitarianism? In N. Taatgen & H. van Rijn (Eds.), Proceedings of the 31st annual conference of the cognitive science society.

Interface theory of perception can overcome the rationality fetish

January 28, 2014 by Artem Kaznatcheev

I might be preaching to the choir, but I think the web is transformative for science. In particular, I think blogging is a great form of pre-pre-publication (and what I use this blog for), and Q&A sites like MathOverflow and the cstheory StackExchange are an awesome alternative architecture for scientific dialogue and knowledge sharing. This is why I am heavily involved with these media, and why a couple of weeks ago, I nominated myself to be a cstheory moderator. Earlier today, the election ended and Lev Reyzin and I were announced as the two new moderators alongside Suresh Venkatasubramanian, who is staying on for continuity and to teach us the ropes. I am extremely excited to work alongside Suresh and Lev, and to do my part to continue developing the great community that we nurtured over the last three and a half years.

Thankfully, being a moderator on cstheory does not change my status elsewhere on the website, so I can continue to be a normal argumentative member of the Cognitive Sciences StackExchange. That site is already home to one of my most heated debates against the rationality fetish. In particular, I was arguing against the statement that “a perfect Bayesian reasoner [is] a fixed point of Darwinian evolution”. This statement can be decomposed into two key assumptions: (1) a perfect Bayesian reasoner makes the most veridical decisions given its knowledge, and (2) veridicity has greater utility for an agent and will be selected for by natural selection. If we accept both premises, then a perfect Bayesian reasoner is a fitness peak. Of course, as we learned before, even if something is a fitness peak, that doesn’t mean we can ever find it.

We can also challenge both of the assumptions (Feldman, 2013); the first on philosophical grounds, and the second on scientific. I want to concentrate on debunking the second assumption because it relates closely to our exploration of objective versus subjective rationality. To make the discussion more precise, I’ll approach the question from the point of view of perception — a perspective I discovered thanks to TheEGG blog; in particular, the comments of recent reader Zach M.
