October 22, 2012

by Artem Kaznatcheev

Last week, we saw how to deal with two-strategy games in finite inviscid populations. Unfortunately, two-strategy games are not adequate to model many of the interactions we might be interested in. In particular, we cannot use Antal et al. (2009a) to look at bifurcations and finitary/stochastic effects in tag-based models of ethnocentrism, at least not without some subtle tricks. In this post we are going to look at a more general approach for any $n$-strategy game developed by Antal et al. (2009b). We will apply these tools to a problem that is new for the paper but old for the blog: ethnocentrism.

Antal et al. (2009b) consider a large but finite population of size $N$ and a game $A = [a_{ij}]$ with $n$ strategies, where $a_{ij}$ is the payoff of strategy $i$ against strategy $j$. For the update rule, they focus on the frequency-dependent Moran process, although their results also hold for the Wright-Fisher process and for pairwise comparison (the Fermi rule). Unlike the previous results for two-strategy games, the present work is applicable only in the limit of weak selection. Mutations are assumed to be uniform with probability $u$: a mutant is any one of the $n$ strategies with equal probability. However, in section 4.2, the authors provide a cute argument for approximating non-uniform but parent-independent mutation rates by repeating strategies in proportion to the likelihood of mutation into them. Much like the two-strategy case, the authors derive separate equations for low and high mutation rates, and then provide a way to interpolate between the two extremes for intermediate mutation rates.

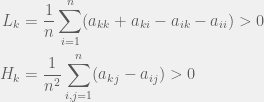

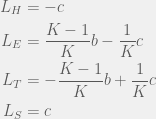

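The mathematics proceeds through a perturbation analysis. The basic idea is to solve for the distribution of strategies in the drift (no selection) model, and then to gradually dial up selection to perturb the distribution slightly into the weak selection regime. The authors use this to arrive at the following two conditions, for low and high mutation respectively, for strategy $k$ to be favored (that is, for its average abundance to exceed $1/n$):

$$L_k = \frac{1}{n}\sum_{i=1}^{n}\left(a_{kk} + a_{ki} - a_{ik} - a_{ii}\right) > 0$$

$$H_k = \frac{1}{n^2}\sum_{i=1}^{n}\sum_{j=1}^{n}\left(a_{kj} - a_{ij}\right) > 0$$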
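To make the two conditions concrete, here is a minimal Python sketch that evaluates $L_k = \frac{1}{n}\sum_i (a_{kk} + a_{ki} - a_{ik} - a_{ii})$ and $H_k = \frac{1}{n^2}\sum_{i,j}(a_{kj} - a_{ij})$ for a payoff matrix stored as a list of rows, assuming `A[k][i]` is the payoff to strategy $k$ against strategy $i$. The function names and the example payoffs are hypothetical (not from the paper); exact `Fraction` arithmetic is used only to avoid rounding noise when comparing scores.

```python
from fractions import Fraction


def low_mutation_score(A, k):
    """L_k = (1/n) * sum_i (a_kk + a_ki - a_ik - a_ii); k is favored at low mutation if > 0."""
    n = len(A)
    total = sum(A[k][k] + A[k][i] - A[i][k] - A[i][i] for i in range(n))
    return Fraction(total) / n


def high_mutation_score(A, k):
    """H_k = (1/n^2) * sum_{i,j} (a_kj - a_ij); k is favored at high mutation if > 0."""
    n = len(A)
    total = sum(A[k][j] - A[i][j] for i in range(n) for j in range(n))
    return Fraction(total) / (n * n)


# Hypothetical Prisoner's dilemma, rows/columns ordered (C, D): R=3, S=0, T=5, P=1.
A = [[3, 0],
     [5, 1]]
for name, k in (("C", 0), ("D", 1)):
    print(name, low_mutation_score(A, k), high_mutation_score(A, k))
# prints "C -3/2 -3/4" and then "D 3/2 3/4"
```

For two strategies both scores are multiples of $R + S - T - P$ (here $L_C = \frac{1}{2}(R+S-T-P)$ and $H_C = \frac{1}{4}(R+S-T-P)$), and across all strategies each score sums to zero.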

There is an intuitive story to help understand these two conditions. In the case of low mutation, the population usually consists of just two strategies until one of them fixes. So for $L_k$ the terms that matter are the pairwise interactions between the strategies, and since the most decisive (and entropy-rich) case is near the 50-50 split between the two strategies, self-interactions ($a_{kk}$ and $a_{ii}$) and other-strategy interactions ($a_{ki}$ and $a_{ik}$) happen with the same frequency. I think this is where the discrepancy with Antal et al. (2009a) that we will see later sneaks in. At the 50-50 split in a population of size $N$, a focal individual meets $N/2 - 1$ others of its own strategy but $N/2$ of the other, so a correction factor of $(N-2)/N$ should be added in front of the self-interaction terms, but I digress.

For the high mutation case, all the strategies are present in the population with about the same frequency at the same time. We need to look at the transitions from this population to get our first-order terms. In that case, the focal individual's fitness is $\frac{1}{n}\sum_{j} a_{kj}$ (since all opponents are equally likely; once again, I believe a correction term is in order), and the average fitness is $\frac{1}{n^2}\sum_{i,j} a_{ij}$. The difference of these two terms produces $H_k$.

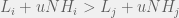

In order to interpolate, we have the following condition for strategy $k$ to be more common than strategy $j$ for intermediate mutation rates $u$:

$$L_k + N u H_k > L_j + N u H_j$$
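A minimal sketch of the interpolation, assuming the same list-of-rows payoff convention: it scores strategy $k$ by $L_k + NuH_k$ and solves for the value of $Nu$ at which two strategies swap rank. The helper names are hypothetical and are repeated here so the block runs on its own; the 3×3 matrix is an illustrative example of ours (a Prisoner's dilemma with $R=10$, $S=0$, $T=11$, $P=1$ plus a third strategy whose interactions all pay 0), chosen so that the ranking of the first two strategies flips.

```python
from fractions import Fraction


def low_mutation_score(A, k):
    n = len(A)
    return Fraction(sum(A[k][k] + A[k][i] - A[i][k] - A[i][i] for i in range(n))) / n


def high_mutation_score(A, k):
    n = len(A)
    return Fraction(sum(A[k][j] - A[i][j] for i in range(n) for j in range(n))) / (n * n)


def abundance_score(A, k, Nu):
    """Weak-selection score L_k + Nu * H_k; a higher score means a more abundant strategy."""
    return low_mutation_score(A, k) + Nu * high_mutation_score(A, k)


def rank_flip(A, k, j):
    """Value of Nu > 0 at which strategies k and j swap rank, or None if they never do."""
    dL = low_mutation_score(A, k) - low_mutation_score(A, j)
    dH = high_mutation_score(A, k) - high_mutation_score(A, j)
    if dH == 0:
        return None
    crossing = -dL / dH
    return crossing if crossing > 0 else None


# Hypothetical payoffs, rows/columns ordered (C, D, third strategy).
A = [[10, 0, 0],
     [11, 1, 0],
     [0, 0, 0]]
print(rank_flip(A, 0, 1))                                   # 5/2
print(abundance_score(A, 0, 0) > abundance_score(A, 1, 0))  # True: C ahead at low mutation
print(abundance_score(A, 0, 3) > abundance_score(A, 1, 3))  # False: D ahead once Nu > 5/2
```

Because the score is linear in $Nu$, two strategies can swap rank at most once as the mutation rate grows.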

How does this disagree with the two-strategy results of Antal et al. (2009a)? Write $R$, $S$, $T$, and $P$ for the payoffs of C against C, C against D, D against C, and D against D. The present paper reproduces the condition of risk dominance, with C dominating D if $R + S > T + P$, but does not produce the small $1/N$ correction of Antal et al. (2009a), where C is more abundant than D only if $(N-2)R + NS > NT + (N-2)P$, or equivalently $R + S - T - P > 2(R - P)/N$. This would be mitigated with the observations I made earlier, but the approach of the perturbation analysis would have to be modified carefully.
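A small sketch comparing the two-strategy conditions, with payoffs $R$ (C vs C), $S$ (C vs D), $T$ (D vs C), and $P$ (D vs D). The second function is the Antal et al. (2009a) finite-population condition $(N-2)R + NS > NT + (N-2)P$. The third applies a $(N-2)/N$ weight to the self-interaction terms of $L_k$, in the spirit of the correction suggested above; that weighting is a hypothetical modification, not a result from either paper. Function names and payoff values are made up for illustration.

```python
from fractions import Fraction


def risk_dominant(R, S, T, P):
    """Antal et al. (2009b) with n = 2: both L_C and H_C are proportional to R + S - T - P."""
    return R + S > T + P


def finite_population_favors_c(R, S, T, P, N):
    """Antal et al. (2009a): C is more abundant than D iff (N-2)R + NS > NT + (N-2)P."""
    return (N - 2) * R + N * S > N * T + (N - 2) * P


def corrected_low_mutation_score(A, k, N):
    """Hypothetical L_k with the self-interaction terms a_kk and a_ii weighted by (N-2)/N."""
    n = len(A)
    f = Fraction(N - 2, N)
    return sum(f * A[k][k] + A[k][i] - A[i][k] - f * A[i][i] for i in range(n)) / n


R, S, T, P = 30, 0, 29, 0  # illustrative values: C is only barely risk dominant
A = [[R, S], [T, P]]
for N in (10, 100):
    print(N, risk_dominant(R, S, T, P), finite_population_favors_c(R, S, T, P, N),
          corrected_low_mutation_score(A, 0, N) > 0)
# prints "10 True False False" and then "100 True True True"
```

The last two columns always agree, because for $n = 2$ the weighted score equals $\frac{1}{2N}\left[(N-2)R + NS - NT - (N-2)P\right]$.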

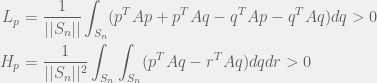

The perturbation method can be extended to mixed strategies, as is done by Tarnita et al. (2009). In that case, we just replace the summations by integrals to get:

$$L_p = \frac{1}{|S_n|}\int_{S_n}\left(p\cdot Ap + p\cdot Aq - q\cdot Ap - q\cdot Aq\right)dq > 0$$

$$H_p = \frac{1}{|S_n|^2}\int_{S_n}\int_{S_n}\left(p\cdot Ar - q\cdot Ar\right)dq\,dr > 0$$

where $S_n$ is the $n$-vertex simplex with volume $|S_n| = \sqrt{n}/(n-1)!$. It is nice to know that the results generalize to mixed strategies, but this is not as important a tool as the pure-strategy variant. I will concentrate on pure strategies, although mixed strategies might be worth revisiting to study the evolution of agency.
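The mixed-strategy integrals can be estimated numerically. The sketch below assumes the integrals are normalized by the simplex volume, so that $L_p = \frac{1}{|S_n|}\int_{S_n}(p\cdot Ap + p\cdot Aq - q\cdot Ap - q\cdot Aq)\,dq$ is an average over a uniformly random $q$; a uniform point on the simplex is a Dirichlet(1, ..., 1) sample, drawn here with NumPy's `Generator.dirichlet`. It checks the Monte Carlo estimate against a closed form built from the Dirichlet moments $E[q_i] = 1/n$ and $E[q_iq_j] = (1 + \delta_{ij})/(n(n+1))$. Function names and the example game are hypothetical.

```python
import numpy as np


def mixed_low_mutation_score(A, p, samples=200_000, seed=0):
    """Monte Carlo estimate of L_p, assuming q is uniform on the simplex (normalized integral)."""
    A = np.asarray(A, dtype=float)
    p = np.asarray(p, dtype=float)
    rng = np.random.default_rng(seed)
    q = rng.dirichlet(np.ones(len(p)), size=samples)  # each row is a uniform point on the simplex
    pAp = p @ A @ p                                   # scalar
    pAq = q @ (A.T @ p)                               # p . A q for every sample
    qAp = q @ (A @ p)                                 # q . A p for every sample
    qAq = np.einsum("si,ij,sj->s", q, A, q)           # q . A q for every sample
    return float(np.mean(pAp + pAq - qAp - qAq))


def exact_mixed_low_mutation_score(A, p):
    """Same quantity from the Dirichlet(1,...,1) first and second moments."""
    A = np.asarray(A, dtype=float)
    p = np.asarray(p, dtype=float)
    n = len(p)
    mean_q = np.full(n, 1.0 / n)
    second_moment = (np.ones((n, n)) + np.eye(n)) / (n * (n + 1))  # E[q_i q_j]
    return float(p @ A @ p + p @ A @ mean_q - mean_q @ A @ p - np.sum(A * second_moment))


A = [[3, 0], [5, 1]]  # hypothetical 2x2 payoffs
p = [1.0, 0.0]        # the pure strategy "always play the first action"
print(mixed_low_mutation_score(A, p), exact_mixed_low_mutation_score(A, p))  # both close to -5/3
```

The same sampling idea extends to $H_p$ by drawing independent pairs $(q, r)$ and averaging $p\cdot Ar - q\cdot Ar$.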

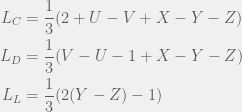

Antal et al. (2009b) showcase their method with three case studies: (i) cooperators, defectors, and loners, (ii) reversing the rank of strategies by mutation, and (iii) cooperators, defectors, and tit-for-tat. The first is the most interesting to me, since it shows how adding an irrelevant strategy can reverse the dominance of the other two. I will present their example in a more general context for all cooperate-defect games. We will introduce an irrelevant strategy L, where irrelevance means that both C and D get the same payoff $X$ from interacting with L, and L gets $Y$ from them. The self-interaction payoff for L can differ, and we can set it to $Z$:

$$\begin{array}{c|ccc} & C & D & L \\ \hline C & R & S & X \\ D & T & P & X \\ L & Y & Y & Z \end{array}$$

The authors consider the particular case of  and

and  , and

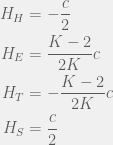

, and  . We can apply the general results to get (for small mutations):

. We can apply the general results to get (for small mutations):

We can look at the condition for C to dominate D for small mutations ($L_C > L_D$) to get $R + S - T - P > -\frac{1}{2}(R - P)$. If we had used $m$ different irrelevant strategies (or just dialed up the proportion of mutations to an irrelevant strategy), then $\frac{1}{2}$ would be replaced by $\frac{m}{2}$. This creates a new strip of cooperation which reaches into the Prisoner's dilemma region (drawn in blue).

When we switch to large mutations, the region disappears and we recover the standard $R + S > T + P$ rule, since $H_C - H_D = \frac{1}{3}(R + S - T - P)$ no matter what $X$, $Y$, and $Z$ are. Note that this example means that, for this analysis, we cannot ignore competing strategies even if they are strictly dominated.
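A sketch that checks both the low- and high-mutation claims with exact arithmetic: it builds the C/D game plus $m$ irrelevant strategies ($n = m + 2$) and confirms that $n(L_C - L_D) = 2(R+S-T-P) + m(R-P)$ while $n(H_C - H_D) = R+S-T-P$. How irrelevant strategies score against one another is not specified in the text; the sketch assumes they all get $Z$ from each other, which affects neither difference. The function names and payoff values are hypothetical.

```python
from fractions import Fraction
from itertools import product


def cdl_matrix(R, S, T, P, X, Y, Z, m=1):
    """Rows/columns: C, D, then m irrelevant strategies (assumed to get Z against each other)."""
    n = m + 2
    A = [[Z] * n for _ in range(n)]
    A[0][0], A[0][1], A[1][0], A[1][1] = R, S, T, P
    for j in range(2, n):
        A[0][j] = A[1][j] = X  # C and D get X against any irrelevant strategy
        A[j][0] = A[j][1] = Y  # irrelevant strategies get Y against C and D
    return A


def low_mutation_score(A, k):
    n = len(A)
    return Fraction(sum(A[k][k] + A[k][i] - A[i][k] - A[i][i] for i in range(n))) / n


def high_mutation_score(A, k):
    n = len(A)
    return Fraction(sum(A[k][j] - A[i][j] for i in range(n) for j in range(n))) / (n * n)


R, S, T, P = 10, 0, 11, 1  # a Prisoner's dilemma with R + S - T - P = -2
for m, (X, Y, Z) in product(range(4), [(0, 0, 0), (2, 7, -3)]):
    A = cdl_matrix(R, S, T, P, X, Y, Z, m)
    n = m + 2
    dL = low_mutation_score(A, 0) - low_mutation_score(A, 1)
    dH = high_mutation_score(A, 0) - high_mutation_score(A, 1)
    assert n * dL == 2 * (R + S - T - P) + m * (R - P)  # independent of X, Y, Z
    assert n * dH == R + S - T - P                      # independent of m, X, Y, Z
    print(m, (X, Y, Z), "low mutation favors C:", dL > 0, "high mutation favors C:", dH > 0)
```

With these payoffs C loses at low mutation only when $m = 0$, and loses at high mutation for every $m$.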

Ethnocentrism in tag-based models

We will consider a strategy space of $K$ tags, with an agent of each tag being either humanitarian (cooperate with all), ethnocentric (cooperate with same tag), traitorous (cooperate with other tags), or selfish (cooperate with none). For the game, we will look at the usual cost-benefit representation of the Prisoner's dilemma: a cooperator pays a cost $c$ to give its partner a benefit $b$. Note that the $L$ and $H$ values will be the same for a given behaviour across the different tags, so out of the $n = 4K$ strategies we only need to compute four of each:

$$L_H = -c, \qquad L_E = b - \frac{b + c}{K}, \qquad L_T = \frac{b + c}{K} - b, \qquad L_S = c$$

This divides the dynamics into two regions: when $b/c > (K + 1)/(K - 1)$ then $L_E > L_S > L_H > L_T$, otherwise (for $b > c$) we have $L_S > L_E > L_T > L_H$. In other words, for large enough $K$ or small enough $c/b$, ethnocentrism can be the dominant strategy in the population. This condition is in perfect agreement with the Traulsen & Nowak (2007) results we saw earlier, although in that case there were no H or T agents. If we remove H and T from the current analysis, we will still get the same condition for ethnocentric dominance even though we will calculate different $L$ values.
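A sketch that rebuilds the tag game explicitly and computes the $L$ values from $L_k = \frac{1}{n}\sum_i (a_{kk} + a_{ki} - a_{ik} - a_{ii})$, both with all four behaviours and with only E and S. It assumes strategies are (tag, behaviour) pairs and a donation game in which cooperating costs $c$ and gives $b$; the names (`COOPERATES`, `tag_game`, and so on) and the parameter values are hypothetical.

```python
from fractions import Fraction
from itertools import product

# Whether each behaviour cooperates, given whether the partner shares its tag.
COOPERATES = {
    "H": lambda same: True,      # humanitarian: cooperate with everyone
    "E": lambda same: same,      # ethnocentric: cooperate with own tag only
    "T": lambda same: not same,  # traitorous: cooperate with other tags only
    "S": lambda same: False,     # selfish: never cooperate
}


def tag_game(K, b, c, behaviours="HETS"):
    """Donation game over (tag, behaviour) strategies: cooperating costs c and gives b."""
    strategies = list(product(range(K), behaviours))
    A = [[b * COOPERATES[s2](t1 == t2) - c * COOPERATES[s1](t1 == t2)
          for (t2, s2) in strategies]
         for (t1, s1) in strategies]
    return strategies, A


def low_mutation_score(A, k):
    n = len(A)
    return Fraction(sum(A[k][k] + A[k][i] - A[i][k] - A[i][i] for i in range(n))) / n


def low_mutation_by_behaviour(K, b, c, behaviours="HETS"):
    """L value of each behaviour (identical across tags, so tag 0 is used)."""
    strategies, A = tag_game(K, b, c, behaviours)
    return {s: low_mutation_score(A, strategies.index((0, s))) for s in behaviours}


K, b, c = 3, 3, 1  # b/c = 3 exceeds (K + 1)/(K - 1) = 2
print(low_mutation_by_behaviour(K, b, c))        # ordering E > S > H > T
print(low_mutation_by_behaviour(K, b, c, "ES"))  # E and S only: still E > S
```

At the boundary $b/c = (K+1)/(K-1)$ (for example $K = 3$, $b = 2$, $c = 1$) the E and S scores tie in both versions of the game, which is the sense in which removing H and T leaves the threshold unchanged.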

For large mutations, the advantage of ethnocentrics disappears completely, and we get:

$$H_H = -\frac{c}{2}, \qquad H_E = \frac{c}{2} - \frac{c}{K}, \qquad H_T = \frac{c}{K} - \frac{c}{2}, \qquad H_S = \frac{c}{2}$$

which for $K > 2$ results in the ordering $H_S > H_E > H_T > H_H$. So if we have mutations that change tag and strategy together (as they do in this case), then higher mutation rates work against ethnocentric cooperation. If we let $\mu = Nu$ be the expected number of mutants per generation, then we can see that ethnocentric cooperation is possible only if $\mu < (K - 1)\frac{b}{c} - (K + 1)$, or, rewritten, $\frac{b}{c} > \frac{K + 1 + \mu}{K - 1}$.
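A sketch that computes the $H$ values from $H_k = \frac{1}{n^2}\sum_{i,j}(a_{kj} - a_{ij})$ on the explicit tag game and solves $L_E + \mu H_E > L_S + \mu H_S$ for the threshold on $\mu = Nu$. It repeats the same hypothetical construction as before (strategies are (tag, behaviour) pairs in a donation game with cost $c$ and benefit $b$) so that it runs on its own; names and parameter values are illustrative assumptions.

```python
from fractions import Fraction
from itertools import product

# Whether each behaviour cooperates, given whether the partner shares its tag.
COOPERATES = {
    "H": lambda same: True,
    "E": lambda same: same,
    "T": lambda same: not same,
    "S": lambda same: False,
}


def tag_game(K, b, c, behaviours="HETS"):
    strategies = list(product(range(K), behaviours))
    A = [[b * COOPERATES[s2](t1 == t2) - c * COOPERATES[s1](t1 == t2)
          for (t2, s2) in strategies]
         for (t1, s1) in strategies]
    return strategies, A


def low_mutation_score(A, k):
    n = len(A)
    return Fraction(sum(A[k][k] + A[k][i] - A[i][k] - A[i][i] for i in range(n))) / n


def high_mutation_score(A, k):
    n = len(A)
    return Fraction(sum(A[k][j] - A[i][j] for i in range(n) for j in range(n))) / (n * n)


def ethnocentric_threshold(K, b, c):
    """mu below which L_E + mu*H_E > L_S + mu*H_S, computed from the payoff matrix."""
    strategies, A = tag_game(K, b, c)
    e, s = strategies.index((0, "E")), strategies.index((0, "S"))
    dL = low_mutation_score(A, e) - low_mutation_score(A, s)
    dH = high_mutation_score(A, e) - high_mutation_score(A, s)  # negative: high mutation favors S
    return -dL / dH


K, b, c = 3, 3, 1
strategies, A = tag_game(K, b, c)
print({s: high_mutation_score(A, strategies.index((0, s))) for s in "HETS"})  # S > E > T > H
print(ethnocentric_threshold(K, b, c), (K - 1) * Fraction(b, c) - (K + 1))    # prints "2 2"
```

The matrix-based threshold matches the closed form $(K-1)\frac{b}{c} - (K+1)$; the E-versus-S comparison is the binding one, since E beats H and T at every mutation rate in this game.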

References

Antal, T., Nowak, M.A., & Traulsen, A. (2009a). Strategy abundance in 2×2 games for arbitrary mutation rates. Journal of Theoretical Biology, 257(2), 340-344.

Antal, T., Traulsen, A., Ohtsuki, H., Tarnita, C.E., & Nowak, M.A. (2009b). Mutation-selection equilibrium in games with multiple strategies. Journal of Theoretical Biology, 258(4), 614-622. PMID: 19248791

Tarnita, C.E., Antal, T., & Nowak, M.A. (2009). Mutation-selection equilibrium in games with mixed strategies. Journal of Theoretical Biology, 261(1), 50-57.

Traulsen, A., & Nowak, M.A. (2007). Chromodynamics of cooperation in finite populations. PLoS ONE, 2(3).

Consider a population of $N$ individuals and $M$ sets, where each individual can belong to $K$ of the sets. Strategy and set membership are heritable (with mutation probabilities $u$ and $v$, respectively), and interactions are only with agents that share a set (if two agents share more than one set, then they interact more than once). However, reproduction is inviscid: a random individual is selected to die and everybody competes to replace them with a child. This set-dependent interaction makes the model equivalent to the earliest models of ethnocentrism, but the model is not equivalent to more modern approaches to ethnocentrism. Since sets cannot reproduce and migration (through mutation) between sets is purely random, the model also cannot capture group selection. However, cooperation in the Prisoner's dilemma still emerges in this model if we have:

is the population-scaled set mutation rate, even when this is zero we get cooperation when

. Alternatively, to simplify we can take the limit of

to get:

) and take the further limit of large populations

then this becomes a very simple:

of:

is a structural constant given by:

), and

becomes:

, it is more enlightening to look at the reciprocal that in the limit of large

becomes:

Tarnita, C.E., Antal, T., Ohtsuki, H., & Nowak, M.A. (2009). Evolutionary dynamics in set structured populations. Proceedings of the National Academy of Sciences, 106(21), 8601-8604. DOI: 10.1073/pnas.0903019106

Mutation-bias driving the evolution of mutation rates

March 31, 2016 by Julian Xue

In classic game theory, we are often faced with multiple potential equilibria between which to select, with no unequivocal way to choose between these alternatives. If you've ever heard Artem justify dynamic approaches, such as evolutionary game theory, then you've seen this equilibrium selection problem take center stage. Natural selection has an analogous 'problem' of many local fitness peaks. Is the selection between them simply an accidental historical process? Or is there a method to the madness that is independent of the environment that defines the fitness landscape and that can produce long-term evolutionary trends?

Two weeks ago, in my first post of this series, I talked about an idea Wallace Arthur (2004) calls “developmental bias”, where the variation of traits in a population can determine which fitness peak the population evolves to. The idea is that if variation is generated more frequently in a particular direction, then fitness peaks in that direction are more easily discovered. Arthur hypothesized that this mechanism can be responsible for long-term evolutionary trends.

A very similar idea was discovered and called “mutation bias” by Yampolsky & Stoltzfus (2001). The difference between mutation bias and developmental bias is that Yampolsky & Stoltzfus (2001) described the idea in the language of discrete genetics rather than trait-based phenotypic evolution. They also did not invoke developmental biology. The basic mechanism, however, was the same: if a population is confronted with multiple fitness peaks nearby, mutation bias will make particular peaks much more likely.

In this post, I will discuss the Yampolsky & Stoltzfus (2001) “mutation bias”, consider applications of it to the evolution of mutation rates by Gerrish et al. (2007), and discuss how mutation is like and unlike other biological traits.
