Introduction to Algorithmic Biology: Evolution as Algorithm

June 1, 2019

As Aaron Roth wrote on Twitter — and as I bet with my career: “Rigorously understanding evolution as a computational process will be one of the most important problems in theoretical biology in the next century. The basics of evolution are many students’ first exposure to “computational thinking” — but we need to finish the thought!”

Last week, I tried to continue this thought for Oxford students at a joint meeting of the Computational Society and Biological Society. On May 22, I gave a talk on algorithmic biology. I want to use this post to share my (shortened) slides as a pdf file and give a brief overview of the talk.

If you didn’t get a chance to attend, maybe the title and abstract will get you reading further:

Algorithmic Biology: Evolution is an algorithm; let us analyze it like one.

Evolutionary biology and theoretical computer science are fundamentally interconnected. In the work of Charles Darwin and Alfred Russel Wallace, we can see the emergence of concepts that theoretical computer scientists would later hold as central to their discipline: ideas like asymptotic analysis, the role of algorithms in nature, distributed computation, and analogy from man-made to natural control processes. By recognizing evolution as an algorithm, we can continue to apply the mathematical tools of computer science to solve biological puzzles – to build an algorithmic biology.

One of these puzzles is open-ended evolution: why do populations continue to adapt instead of getting stuck at local fitness optima? Or alternatively: what constraint prevents evolution from finding a local fitness peak? Many solutions have been proposed to this puzzle, with most being proximal – i.e. depending on the details of the particular population structure. But computational complexity provides an ultimate constraint on evolution. I will discuss this constraint, and the positive aspects of the resultant perpetual maladaptive disequilibrium. In particular, I will explain how we can use this to understand both on-going long-term evolution experiments in bacteria and the evolution of costly learning and cooperation in populations of complex organisms like humans.

Unsurprisingly, I’ve written about all these topics already on TheEGG, and so my overview of the talk will involve a lot of links back to previous posts. In this way, this post can serve as an analytic linkdex on algorithmic biology.

One of my students, Joe Gardner, invited me to give this talk. Together with Ben Slater, he was excited about my recent paper on computational complexity as an ultimate constraint on evolution. They thought that other students would also be interested, and that this could be a good way to bring the Computational Society and Biological Society together for a joint event.

I was more than happy to participate.

But given the technical nature of some of my work, I wanted to focus on a broad overview of algorithmic biology and evolution. And only briefly touch on my specific work at the end. The talk ended up having four main parts:

- Introduction: the spectrum from computational to algorithmic

- Natural Selection: Struggle for Existence as the constraint that powers evolution

- Machines and Nature: from Steam Engines to Computers

- Algorithmic Biology: Computational Complexity as the constraint that powers open-ended evolution

In terms of the actual content, the talk followed the ideas sketched in the following posts from TheEGG:

Quick introduction: the algorithmic lens (March 29, 2019)

Most people are familiar with a particular form of interaction between computer science and biology: what can be broadly classified as computational biology. This includes areas like bioinformatics, agent-based modeling of evolution, and maybe even topics like genetic programming and genetic algorithms. But this isn’t the only aspect of the boundary between computer science and biology. It is just practical skills from computer science applied to the outputs of biology. A complementary approach is the use of mathematical techniques from computer science to build the conceptual grounding of biology. This was the aspect that I wanted the talk to focus on.

For this, I needed to give some history of evolution:

British agricultural revolution gave us evolution by natural selection (May 25, 2019)

If we look at the origins of evolution by natural selection, we can find a strong motivating factor from technology. One of the technological achievements of Darwin’s time was rapid improvements in animal husbandry and agriculture. In the above post, I make the case that this technology was an essential part of the inspiration for Darwin’s foundational insights. Darwin looked at selection implemented by Robert Bakewell and asked if instead it can be implemented by a non-human agent — an abstract agent like the struggle for existence.

Darwin as an early algorithmic biologist (August 4th, 2018)

In doing this, Darwin was being an early algorithmic biologist. He was recognizing the importance of multiple-realizability and the fact that algorithms can be implemented in a distributed manner. In viewing the struggle for existence as the implementing agent for natural selection, Darwin was also using asymptotic analysis: seeing the qualitative difference in growth rate between the constant or polynomially increasing abundance of resources and the exponential growth of populations.
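The asymptotic point can be made concrete with a small sketch. It assumes, purely for illustration, that resources grow linearly (a fixed amount added per generation) while the population multiplies by a fixed factor each generation. The function name and all numbers are hypothetical and not taken from Darwin or from the linked post.

```python
def first_generation_exceeding(resource_start, resource_step,
                               pop_start, growth_factor,
                               max_generations=10_000):
    """Return the first generation at which an exponentially growing
    population exceeds a linearly growing resource supply, or None if
    that does not happen within max_generations."""
    for t in range(max_generations + 1):
        resources = resource_start + resource_step * t   # linear growth
        population = pop_start * growth_factor ** t      # exponential growth
        if population > resources:
            return t
    return None


# Illustrative numbers: resources for a million individuals, growing by
# another million every generation, versus two founders that double.
crossover = first_generation_exceeding(1_000_000, 1_000_000, 2, 2)
print(crossover)  # 24
```

Even with a million-fold head start for resources, the doubling population overtakes them in a couple dozen generations. Changing the constants moves the crossover point but not the qualitative outcome, which is the sense in which the argument is asymptotic.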

Fitness landscapes as mental and mathematical models of evolution (August 16th, 2013)

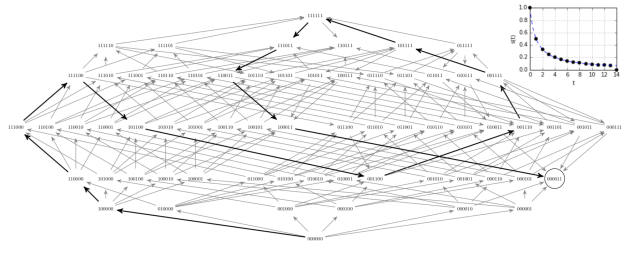

To make this process of evolution easier to think about and model, in the century after Darwin, Wright developed the fitness landscape metaphor. The discrete aspect of the metaphor is especially useful if we want to incorporate the discrete elements of Mendelian genetics — something that was foreign to Darwin, but is an essential part of our current biological thought. But fitness landscapes also raise an issue: why aren’t all species just stuck at local fitness peaks? Why do we see any evolution happening at all?
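A toy example may help show what getting stuck at a local peak means on a discrete landscape. The sketch below assumes genotypes are short bit strings, fitness is given by a lookup table, and the population moves to the fittest one-mutation neighbour at each step. That update rule is only one of many possible evolutionary dynamics, and the fitness values are made up for illustration.

```python
def neighbors(genotype):
    """All genotypes one point mutation (a single bit flip) away."""
    flip = {"0": "1", "1": "0"}
    return [genotype[:i] + flip[genotype[i]] + genotype[i + 1:]
            for i in range(len(genotype))]


def adaptive_walk(fitness, start):
    """Follow fittest-neighbour steps until no neighbour is fitter.
    Returns the list of genotypes visited, ending at a local peak."""
    current = start
    path = [current]
    while True:
        fitter = [g for g in neighbors(current)
                  if fitness[g] > fitness[current]]
        if not fitter:          # no fitter neighbour: a local peak
            return path
        current = max(fitter, key=fitness.get)
        path.append(current)


# Hypothetical two-locus landscape with two peaks: '00' and '11'.
fitness = {"00": 2, "01": 1, "10": 1, "11": 3}

print(adaptive_walk(fitness, "00"))  # ['00']
print(adaptive_walk(fitness, "01"))  # ['01', '11']
```

Starting from `'00'`, the walk never moves, even though `'11'` is fitter: every single mutation away from `'00'` is deleterious. That is the puzzle in miniature.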

Darwin and Wallace were geologists, so they overcame the local peak problem by having the environment constantly change. For them, the world is constantly changing at the geological level, and thus geological change gets reflected in the biological world. This is almost certainly a great explanation for a naturalist, but an experimentalist can throw a wrench in this reasoning. Richard Lenski has done this by evolving E. coli for (now) 70,000 generations in a static environment. The populations still haven’t found a fitness peak and continue to increase in fitness. Lenski and colleagues see open-ended evolution.

This is part of what I aim to explain.

From perpetual motion machines to the Entscheidungsproblem (March 9th, 2019)

But before explaining open-ended evolution, it is important to understand the ideas of multiple realizability and abstraction. This can be illustrated by turning to the other big new technology of Darwin’s time: steam engines.

If today someone came to you with plans for a perpetual motion machine, you would know that those plans are wrong without even examining them. This is due to the foundational role that thermodynamics has achieved. We don’t need to know the details of the proposed machine to know that it would have to violate the laws of physics as we know them to achieve its result.

Computational complexity can achieve a similar foundational reach. Just like we don’t need to think about thermodynamics as being about steam engines, we don’t need to see computational complexity as being about computers. Any system can be viewed and analyzed as an algorithm, including evolution. And thus computational constraints can be applied to evolution.

Most importantly, this means that we should analyze evolution as an algorithm.

And if we can’t analyze the algorithm, then we shouldn’t attribute random properties to it that ‘feel right’, seem obvious in the one-step case, or that we want to be true. That’s not good practice when we’re programming, and it’s not good practice when we’re doing science, either.

In particular, this means that we should shift some of our metaphors for fitness landscapes from mountain ranges to mazes.

Proximal vs ultimate constraints on evolution (July 24th, 2018)

In evolutionary biology, constraints are what prevent evolution from finding peaks (or exits in the maze metaphor) in fitness landscapes. These constraints can be caused by various factors. By analogy to the distinction between algorithms vs problems, I divide the constraints into two types: proximal vs ultimate.

Most of the constraints on evolution familiar to biologists are proximal. They are due to features of the particular population, like population or developmental structure, trait co-variants, standing variation, etc. In contrast, computational complexity is an ultimate constraint: it applies to any population on a given family of landscapes.

From this background, I could describe my recent results:

Computational Complexity as an Ultimate Constraint on Evolution

This involves thinking about the different kinds of epistasis and the corresponding landscapes, from smooth to semismooth to rugged: how we get progressively harder landscapes as we allow more freedom in the kind of epistasis that occurs, and how the resultant perpetual maladaptive disequilibrium can be transformed into positive results, like solutions to the Baldwin effect for costly learning, or making cooperation permanent due to the Hankshaw effect.
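To make the kinds of epistasis more tangible, here is a minimal sketch that classifies the interaction between two biallelic loci from four fitness values. It assumes the common textbook definitions of magnitude, sign, and reciprocal sign epistasis. The function name and the example fitness values are hypothetical, and the correspondence to smooth, semismooth, and rugged landscapes noted in the comments is an assumption stated for orientation rather than something spelled out in this post.

```python
def classify_epistasis(f00, f01, f10, f11):
    """Classify two-locus epistasis from the fitness of genotypes
    00, 01, 10 and 11 (first digit = first locus).
    Assumes no two adjacent genotypes have exactly equal fitness."""
    # Effect of the 0 -> 1 mutation at the first locus,
    # on each background of the second locus.
    first_on_0 = f10 - f00
    first_on_1 = f11 - f01
    # Effect of the 0 -> 1 mutation at the second locus,
    # on each background of the first locus.
    second_on_0 = f01 - f00
    second_on_1 = f11 - f10

    # Does the sign of a mutation's effect depend on the background?
    first_flips = (first_on_0 > 0) != (first_on_1 > 0)
    second_flips = (second_on_0 > 0) != (second_on_1 > 0)

    if first_flips and second_flips:
        return "reciprocal sign"   # assumed: permitted only in rugged landscapes
    if first_flips or second_flips:
        return "sign"              # assumed: permitted in semismooth landscapes
    if first_on_0 != first_on_1:
        return "magnitude"         # assumed: the only kind in smooth landscapes
    return "none"                  # purely additive effects


print(classify_epistasis(1, 2, 2, 3))  # none
print(classify_epistasis(1, 2, 2, 5))  # magnitude
print(classify_epistasis(2, 4, 6, 5))  # sign
print(classify_epistasis(2, 1, 1, 3))  # reciprocal sign
```

The last example is a two-peaked landscape in which each mutation is deleterious alone but beneficial together, so a walk that starts at `00` stays there.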

For now, I think that the best summary of these results is still my 25-tweet thread on this paper. But maybe I should consider writing a more linkable post in the future.
