Baldwin effect and overcoming the rationality fetish

October 6, 2013 23 Comments

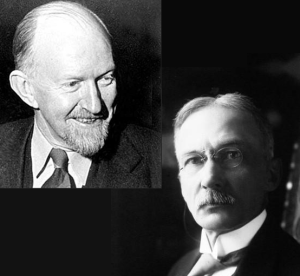

Introduced in 1896 by psychologist J.M. Baldwin, then named and reconciled with the modern synthesis by leading paleontologist G.G. Simpson (1953), the Simpson-Baldwin effect posits that “[c]haracters individually acquired by members of a group of organisms may eventually, under the influence of selection, be reenforced or replaced by similar hereditary characters” (Simpson, 1953). More explicitly, it consists of a three-step process (some steps of which can occur in parallel or partially so):

- Organisms adapt to the environment individually.

- Genetic factors produce hereditary characteristics similar to the ones made available by individual adaptation.

- These hereditary traits are favoured by natural selection and spread in the population.

The overall result is that adaptations that were originally individual and non-hereditary become hereditary. For Baldwin (1896, 1902) and other early proponents (Morgan 1886; Osborn 1886, 1887) this was a way to reconcile Darwinian and strong Lamarckian evolution. With the latter model of evolution exorcised from the modern synthesis, Simpson’s restatement became a paradox: why do we observe the costly mechanism and associated errors of individual learning, if learning does not enhance individual fitness at equilibrium and will be replaced by simpler non-adaptive strategies? This encompasses more specific cases like Rogers’ paradox (Boyd & Richerson, 1985; Rogers, 1988) of social learning.
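To make the three-step process above concrete, here is a minimal toy simulation. It is only a sketch under assumptions that are not in the post: learning always succeeds but costs a fixed amount of fitness per learned trait, reproduction is asexual, and the names `simulate_baldwin`, `learning_cost`, and `mutation_rate` are made up for illustration.

```python
import random


def simulate_baldwin(generations=300, pop_size=300, n_traits=10,
                     learning_cost=0.05, mutation_rate=0.005, seed=0):
    """Return the fraction of innate (hereditary) traits in each generation.

    Toy assumptions (hypothetical, not from the post): a genome is a list
    of booleans, True meaning the trait is innate and False meaning the
    individual has to learn it. Learning always works but costs fitness.
    """
    rng = random.Random(seed)
    # Step 1: every individual adapts by learning; nothing is innate yet.
    population = [[False] * n_traits for _ in range(pop_size)]
    history = []
    for _ in range(generations):
        innate = sum(genome.count(True) for genome in population)
        history.append(innate / (pop_size * n_traits))
        # Everyone shows the adaptive behaviour, but learners pay for it.
        fitness = [1.0 - learning_cost * genome.count(False)
                   for genome in population]
        # Step 3: selection, parents are sampled in proportion to fitness.
        parents = rng.choices(population, weights=fitness, k=pop_size)
        # Step 2: mutation occasionally flips a trait between learned and
        # innate, producing hereditary versions of learned traits.
        population = [
            [(not trait) if rng.random() < mutation_rate else trait
             for trait in parent]
            for parent in parents
        ]
    return history


if __name__ == "__main__":
    costly = simulate_baldwin(learning_cost=0.05)
    neutral = simulate_baldwin(learning_cost=0.0)
    print("innate fraction with costly learning:", round(costly[-1], 2))
    print("innate fraction with free learning:  ", round(neutral[-1], 2))
```

In this sketch the innate fraction rises further when learning is costly than when it is free, which is the replacement of learning that the paradox turns on. The model deliberately leaves out anything that would keep learning around, such as a changing environment.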


Pairing tools and problems: a lesson from the methods of mathematics and the Entscheidungsproblem

March 16, 2015 by Artem Kaznatcheev 21 Comments

Three weeks ago it was my lot to present at the weekly integrated mathematical oncology department meeting. Given the informal setting, I decided to grab one gimmick and run with it. I titled my talk: ‘2’. It was an overview of two recent projects that I’ve been working on: double public goods for acid-mediated tumour invasion, and edge effects in game-theoretic dynamics of solid tumours. For the former, I considered two approximations: the limit where the number n of interaction partners is large, and the case n = 1, where there are just two interacting parties. But the numerology didn’t stop there: my real goal was to highlight a duality between tools or techniques and the problems we apply them to or domains we use them in. As is popular at the IMO, the talk was live-tweeted with many unflattering photos and this great paraphrase (or was it a quote?) by David Basanta from my presentation’s opening:

Since I was rather sleep-deprived from preparing my slides, I am not sure what I said exactly, but I meant to say something like the following:

I don’t subscribe to the perspective that we should pick the best tool for the job. Instead, I try to pick the best tuple of job and tool given my personal tastes, competences, and intuitions. In doing so, I aim to push the tool slightly beyond its prior borders — usually with an incremental technical improvement — while also exploring a variant perspective — but hopefully still grounded in the local language — on some domain of interest. The job and tool march hand in hand.

In this post, I want to unpack this principle and follow it a little deeper into the philosophy of science. In the process, I will touch on the differences between endogenous and exogenous questions. I will draw some examples from my own work, but will rely primarily on methodological inspiration from pure math and the early days of theoretical computer science.

