Ohtsuki-Nowak transform for replicator dynamics on random graphs

October 25, 2012

We have seen that the replicator equation can be a useful tool in understanding evolutionary games. We’ve already used it for a first look at perception and deception, and the cognitive cost of agency. However, the replicator equation comes with a number of inherent assumptions and limitations. The limitation Hisashi Ohtsuki and Martin Nowak wanted to address in their 2006 paper “The replicator equation on graphs” is the assumption of a totally inviscid population. The goal is to introduce a morsel of spatial structure, while still retaining the analytic tractability and ease-of-use of replicator dynamics.

Replicator dynamics assume that every individual in the population is equally likely to interact with every other individual in the population. However, in most settings the possible interactions are not as simple. Usually, there is some sort of structure to the population. The way we usually model structure is by representing individuals as vertices in a graph: if two vertices are adjacent (connected by an edge) then the two individuals can interact. For replicator dynamics the model would be a complete graph. Ohtsuki and Nowak consider a structure which is a bit more complicated: a random $k$-regular graph.

There are several ways to think about random $k$-regular graphs. We call a graph $k$-regular if every vertex has exactly $k$ edges incident on it (and thus, exactly $k$ neighbors). If we consider a sample from the uniform distribution over all $k$-regular graphs then we get a random $k$-regular graph. Of course, this is an inefficient approach, but it captures the main statistical qualities of these graphs. To see how to efficiently generate a graph, take a look at Marcel’s post on generating random $k$-regular graphs.
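To make the definition concrete, here is a minimal sketch of one standard way to sample such a graph: the pairing (configuration) model with rejection. This is not necessarily the method from Marcel’s post, and the function name `random_regular_graph` and its parameters are my own choices for illustration. Each vertex gets $k$ stubs, the stubs are matched uniformly at random, and any matching that contains a self-loop or a repeated edge is thrown away, which leaves a uniform sample over simple $k$-regular graphs.

```python
import random


def random_regular_graph(n, k, rng=None, max_tries=10000):
    """Sample a simple k-regular graph on n vertices with the pairing model.

    Returns a set of edges (u, v) with u < v. A random matching of stubs is
    rejected and redrawn if it contains a self-loop or a repeated edge.
    """
    if (n * k) % 2 != 0 or k >= n:
        raise ValueError("need n * k even and k < n")
    rng = rng or random.Random()
    for _ in range(max_tries):
        # Each vertex contributes k stubs; shuffling and pairing consecutive
        # stubs gives a uniformly random perfect matching of the stubs.
        stubs = [v for v in range(n) for _ in range(k)]
        rng.shuffle(stubs)
        edges = set()
        simple = True
        for i in range(0, len(stubs), 2):
            u, v = stubs[i], stubs[i + 1]
            edge = (min(u, v), max(u, v))
            if u == v or edge in edges:
                simple = False  # self-loop or multi-edge: reject this draw
                break
            edges.add(edge)
        if simple:
            return edges
    raise RuntimeError("no simple graph found; increase max_tries")
```

Rejection is cheap for small $k$ but the acceptance rate drops quickly as $k$ grows, so this is only meant for the sparse graphs considered here.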

With the spatial structure in place, the next important decision is the update or evolutionary dynamics to use. Ohtsuki & Nowak (2006) consider four different rules: birth-death (BD), death-birth (DB), imitation (IM), and pairwise comparison (PC). For BD, a player is chosen for reproduction in proportion to fitness and the offspring replaces a neighbor chosen uniformly at random. In DB, a focal agent is chosen uniformly at random and is replaced by one of his neighbors, who are sampled randomly in proportion to fitness. IM is the same as DB, except the focal agent counts as one of its own neighbors. For PC, both the focal individual and his partner are chosen uniformly at random, but the focal agent replaces his partner with a probability that depends (according to the Fermi rule) on their payoff difference; otherwise the partner replaces the focal individual.
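As an illustration, the death-birth rule can be sketched in a few lines. The setup here is my own assumption rather than the paper’s exact one: strategies are integer indices into a payoff matrix `A`, the graph is an adjacency dictionary `neighbors`, and fitness is taken as $1 - w + w \cdot \text{payoff}$ with a small selection strength `w`. The names `payoff` and `death_birth_step` are hypothetical.

```python
import random


def payoff(i, strategy, neighbors, A):
    """Total payoff vertex i collects from playing game A with each neighbor."""
    return sum(A[strategy[i]][strategy[j]] for j in neighbors[i])


def death_birth_step(strategy, neighbors, A, w=0.01, rng=random):
    """Perform one DB update in place and return the replaced vertex."""
    # Death: the focal vertex is chosen uniformly at random.
    focal = rng.randrange(len(strategy))
    candidates = list(neighbors[focal])
    # Birth: neighbors compete for the empty site in proportion to fitness.
    # Assumed weak-selection fitness: baseline 1 plus a small payoff effect.
    fitness = [1 - w + w * payoff(j, strategy, neighbors, A) for j in candidates]
    parent = rng.choices(candidates, weights=fitness, k=1)[0]
    strategy[focal] = strategy[parent]
    return focal
```

Under the same assumptions, BD would swap the order (pick the parent by fitness over the whole population, then a uniformly random neighbor to replace), and IM would add the focal vertex to `candidates`.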

Throughout the paper, fitness is calculated in the limit of weak selection. To account for the spatial structure, the authors use pair-approximation. This method is exact for Bethe lattices and accurate for any graph that is locally tree-like. Thankfully, random regular graphs are locally tree-like (out to distance on the order of $\log n$, where $n$ is the number of vertices) and hence the analysis is reasonable for large $n$. However, most real-world networks tend to have high clustering coefficients, and thus are not tree-like. For such networks, the Ohtsuki-Nowak transform would be a bad choice, but still a step closer than the completely inviscid replicator dynamics.

The stunning aspect of the paper is that in the limit of very large populations they get an exceptionally simple rule. Given a game matrix $A$, you just have to compute the Ohtsuki-Nowak transform $A' = \text{ON}_k(A)$ and then you recover the dynamics of your game by simply looking at the replicator equation with $A'$. I will present the authors’ result in slightly different notation than their paper, in order to stress the most important qualitative aspects of the transform. For that purpose I need to define the column vector $\Delta$ which is the diagonal of the game matrix $A$, i.e. $\Delta_i = A_{ii}$. The transform becomes:

$$\text{ON}_k(A) = A + \frac{1}{k - 2}\left(\Delta\vec{1}^T - \vec{1}\Delta^T\right) + \frac{\nu}{k - 2}\left(A - A^T\right)$$

where $\vec{1}$ is the all ones vector; thus $\Delta\vec{1}^T$ ($\vec{1}\Delta^T$) is a matrix with diagonal elements repeated to fill each row (column). I call the last element ($\nu$) the neighborhood update parameter. Ohtsuki & Nowak show that for birth-death and pairwise comparison $\nu = 1$, for imitation it is $\nu = \frac{3}{k + 3}$, and for death-birth $\nu = \frac{1}{k + 1}$. I used this suggestive notation because I think the transform can be refined to allow all values of $\nu$ between $\frac{1}{k + 1}$ and $1$. In particular, I think the easiest way to achieve this is by selecting between BD and DB with some probability at each update step.
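The transform is simple enough to compute by hand, but a small sketch may help when experimenting with games. It assumes the form $\text{ON}_k(A) = A + \frac{1}{k-2}(\Delta\vec{1}^T - \vec{1}\Delta^T) + \frac{\nu}{k-2}(A - A^T)$, with $\Delta$ the diagonal of $A$ and $\nu$ equal to $1$ for BD and PC, $\frac{3}{k+3}$ for IM, and $\frac{1}{k+1}$ for DB, which is my reading of Ohtsuki & Nowak’s result. The function names `ohtsuki_nowak` and `nu_for_rule` are hypothetical.

```python
def nu_for_rule(rule, k):
    """Neighborhood update parameter for each of the four update rules."""
    return {
        "BD": 1.0,
        "PC": 1.0,
        "IM": 3.0 / (k + 3),
        "DB": 1.0 / (k + 1),
    }[rule]


def ohtsuki_nowak(A, k, nu):
    """Return the transformed payoff matrix for a square list-of-lists A."""
    if k <= 2:
        raise ValueError("the transform needs degree k > 2")
    n = len(A)
    return [
        [
            A[i][j]
            + (A[i][i] - A[j][j]) / (k - 2)       # entry (i, j) of the diagonal term
            + nu * (A[i][j] - A[j][i]) / (k - 2)  # entry (i, j) of the A - A^T term
            for j in range(n)
        ]
        for i in range(n)
    ]
```

The diagonal of the result always equals the diagonal of `A`, since both correction terms vanish when `i == j`.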

My other reason for rewriting in this condensed form is to gain an intuition for each term of the sum. The first term is the original game, and is expected to be there with some summation because we are doing a small perturbation in the limit of weak selection; thus a linear correction to $A$ is not surprising. The second term contains a difference of only diagonal elements between the focal agent and the competing strategy. Thus, the second term is a local-aggregation term, and it is not surprising to see it lose strength as $k$ increases and the focal agent has a smaller and smaller effect on its neighborhood. Ohtsuki & Nowak think of the third term as an assortment factor; however, this intuition is lost on me. Hilbe (2011) studies the replicator equation with finite sampling, and achieves the same modification (with some massaging) as the third term of the transform, which we will discuss in a future post. Thus, I find it better to think of the third term as a finite sampling term. It is then clear why the term decreases from BD to DB: the sampling neighborhood for calculating fitness is quadratically larger in the latter update rule.

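As case studies, Ohtsuki & Nowak (2006) apply their transform to the prisoner’s dilemma, snowdrift, coordination, and rock-paper-scissors games. I refer the interested reader to the original paper for the latter example and I will deal with the former three at once by looking at all cooperate-defect games:

$$\text{ON}_k\begin{pmatrix} 1 & U \\ V & 0 \end{pmatrix} = \begin{pmatrix} 1 & X \\ Y & 0 \end{pmatrix}$$

where X-Y is a new coordinate system given by the linear transform (with $k$ the degree of the graph and $\nu$ the neighborhood update parameter):

$$\begin{pmatrix} X \\ Y \end{pmatrix} = \frac{1}{k - 2}\begin{pmatrix} 1 \\ -1 \end{pmatrix} + \frac{1}{k - 2}\begin{pmatrix} k - 2 + \nu & -\nu \\ -\nu & k - 2 + \nu \end{pmatrix}\begin{pmatrix} U \\ V \end{pmatrix}$$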

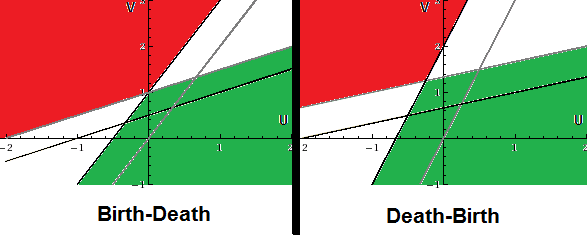

The effects of this coordinate transform on UV-space can be seen in the figure above for a fixed degree $k$. The green region is where cooperation is the only evolutionarily stable strategy (ESS); the red is where defection is the only ESS. The uncoloured regions have an interior fixed point of the C-D dynamics: in the top right region of game space the point is an attractor, and in the bottom left it is a point of bifurcation. We can see that BD cannot create cooperation in the PD game, while DB creates a small sliver of white where cooperation can co-exist with defection. In both conditions, we can see large effects for the Hawk-Dove game. In the non-transformed game, the only type of equilibrium in HD is an attractive fixed point where cooperators and defectors co-exist. However, under both the BD and DB dynamics, regions of full cooperator and full defector dominance appear in the HD game. Similarly for coordination games: usually there is only bifurcation around a fixed point, but under both BD and DB, regions of all cooperators and all defectors appear. Thus, spatial structure can resolve the coordination equilibrium selection dilemma for some game parameters.
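The regions in the figure can be explored numerically with a short sketch. It rests on two assumptions of mine: that cooperate-defect games are written as the matrix with rows $(1, U)$ and $(V, 0)$, and that the transformed coordinates are $X = U + s$ and $Y = V - s$ with $s = \frac{1 + \nu(U - V)}{k - 2}$, which is what the transform gives for that matrix. The names `transform_uv` and `regime` are hypothetical.

```python
def transform_uv(U, V, k, nu):
    """Map game coordinates (U, V) to the transformed coordinates (X, Y)."""
    shift = (1 + nu * (U - V)) / (k - 2)
    return U + shift, V - shift


def regime(U, V):
    """Qualitative replicator dynamics of the game [[1, U], [V, 0]].

    With p the fraction of cooperators, the fitness advantage of C over D
    is U at p = 0 and 1 - V at p = 1, so the signs of U and 1 - V decide
    which of the pure states can be invaded.
    """
    if U > 0 and V < 1:
        return "cooperation dominates"
    if U < 0 and V > 1:
        return "defection dominates"
    if U > 0 and V > 1:
        return "stable coexistence"   # interior fixed point is an attractor
    if U < 0 and V < 1:
        return "bistable"             # interior fixed point is a bifurcation point
    return "boundary case"
```

For instance, with $k = 3$ a prisoner’s dilemma at $(U, V) = (-0.1, 1.1)$ stays in the defection region under BD ($\nu = 1$) but lands in the cooperation region under DB ($\nu = \frac{1}{4}$), and a Hawk-Dove game at $(0.1, 1.8)$ moves from stable coexistence to defector dominance under BD.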

Hilbe, C. (2011). Local replicator dynamics: a simple link between deterministic and stochastic models of evolutionary game theory. Bulletin of Mathematical Biology, 73: 2068-2097.

Ohtsuki, H., & Nowak, M.A. (2006). The replicator equation on graphs. Journal of Theoretical Biology, 243(1): 86-97. PMID: 16860343

Would other update rules fall in between those two in UV space, or might there be even wilder changes due to update rule?

IM falls in between them, as I suspect would the probabilistic rule I suggest. However, I don’t think you can reasonably take […] without making odd update rules.

As far as I can tell, that term is related to the number of agents you sample to get the most important info for an update. It is unusual to look more than two steps away from a focal individual to calculate where his child goes, and the number of agents within two steps is […] and the finite-sampling discount factor according to Hilbe would go as […], so having more than […] of a discount seems unlikely to me, and DB achieves this.

However, I will think about it more carefully and try to expand on the ideas when I post about Hilbe's paper. Thanks for the suggestion!
