Toward an algorithmic theory of biology

June 9, 2013 23 Comments

When you typically think of computer scientists working on questions in biology, you probably picture a bioinformatician. Although bionformatics makes heavy use of algorithms and machine learning, and its practitioners are often mildly familiar with computational complexity (enough to know that almost everything they study is NP-complete), it doesn’t really apply computational thinking to understand or building theories in biology. Instead, it often generates or analyzes petabytes of blind data that biologists subsequently use to generate or test traditional verbal or physics-inspired hypotheses. The 2nd workshop on Natural Algorithms and the Sciences took a completely different approach.

The workshop was held on May 20th and 21st by Princeton’s Center for Computational Intractability and attracted speakers from biology, computer science, engineering, math and elsewhere. The meeting had a heavy focus on theoretical computer science and a return to the founding spirit of Alan Turing by tackling big fundamental questions in the sciences. It saw applications of computational complexity, computability theory, machine learning, distributed and parallel computing, and information theory. Although the mandate of the workshop is broader than looking at biology, most of the talks returned to questions in the biological sciences. I greatly enjoyed my time at the workshop, and intended to live blog the event. However, a poor internet connection at my residence, other time commitments, and the vast amount of ideas I wanted to cover instead translated into a series of seven posts (this is the eighth) that spanned the last three weeks. To make reading (and later reference) easier, this post is a TL;DR of the last seven posts. Each section is a short summary of a post and a list of the talks discussed; at the end I include a partial bibliography for further reading. Click through on the headings to learn more and join the discussion on specific topics!

Natural algorithms and the sciences

I wrote this post the night before the workshop began, summarizing its aims and my excitement to attend. I explained the basics of natural algorithms and summarized the three broad directions of natural computing: (1) finding metaheuristics to solve engineering problems, (2) designing non-electronics based implementations for computers, and (3) using ideas of theoretical computer science to reason about natural and social phenomena or the theories that describe them. The workshop and subsequent posts focused almost exclusively on the third theme, since it is least developed and holds the most promise for scientific (as opposed to merely technological) progress. I closed the post by stressing the difference between the work to be presented and typical simulations, and sketched the distinction between Eulerian and Lagrangian approaches to modeling.

Algorithmic view of historicity and separation of scales in biology

Featuring summaries of the talks:

Featuring summaries of the talks:

- Albert Libchaber: “Experiments on artificial cells“,

- Leslie Valiant: “Algorithms for adaptive phenomena“,

- Simon Levin: “Bounded rationality and decision-making“,

- Naomi Ehrich Leonard: “Network topology and the evolution of leadership in collective migration“, and

- first part of Bernard Chazelle‘s opening remarks.

This post began with an obligatory tip-of-the-hat to the story of Thomas M.S. Chang and the creation of the first artificial cell before highlighting Libchaber’s modern experimental work on synthetic biology. To stress the difference between artificial and natural systems, Valiant looked at evolution as a special type of machine learning and how we can use computer science to study the question of a ‘designer’. I was concerned about Valiant’s reliance on static fitness landscapes; Levin mirrored my concern by starting his talk on the distinction between static and frequency-dependent fitness landscapes in evolution. However, he spent most of his time on questions of development and cognition, closing with herding behavior. Leonard continued the trend to higher orders of organization by looking at how individual cognition leads to the social learning dilemma of group migration. Chazelle generalized Leonard and Levin’s model into influence systems, and showed how it can be used to play with separations of timescales.

Big thanks to the editors at ScienceSeeker.org! They enjoyed this post enough to feature it in their picks.

Computer science on prediction and the edge of chaos

Featuring summaries of the talks:

Featuring summaries of the talks:

- Joel Lebowitz: “Microscopic models of macroscopic behavior“,

- Cris Moore: “Universality, hardness, engineering, and messiness“,

- Mark Braverman: “Noise versus computational intractability in dynamics“, and

- second part of Bernard Chazelle’s opening remarks.

We can think of physicists working on statistical mechanics as the first agent-based modellers. Lebowitz sketched how mathematical physics are still continuing their quest (at least for some systems) to rigorously show that macrodynamics fatefully reproduce the aggregate behavior of the microstates. Moore showed that when it comes to computational systems, the tools of statistical mechanics are inadequate to distinguish drastically different problems like 3SAT and 3XOR. Along with Braverman, he considered fundamental obstacles to prediction such as the system being complete for its complexity class, universal, or chaotic. Thankfully, Braverman and Chazelle discussed that the obstacle of universality is often a knife-edge phenomena, and Moore suggested that we can overcome it with more recent cstheory tools like smoothed analysis.

Distributed computation in foraging desert ants

Featuring summaries of the talks:

Featuring summaries of the talks:

- Ofer Feinerman: “Fighting noise with limited resources: an ant colony perspective“, and

- Amos Korman: “Integrating theoretical algorithmic ideas in empirical biological study“

Feinerman and Korman work together to combine experiment and computer science theory in studying desert ants. Since these ants do not use pheromone trails to communicate, they must coordinate in the ant hill before heading out for foraging. As such, they are the perfect candidates for looking at distributed computing. Korman sketched a general framework we can use for combining cstheory with natural experiments, and connecting biological parameters by using information-theoretic lower-bounds from computer science. The ant experiments make for some awesome videos, and I recommend watching them all on Feinerman’s research page.

This post caught the attention of WordPress Story Wrangler Cheri Lucas Rowlands. She was kind enough to feature it on the Freshly Pressed section of the WordPress frontpage. Thank you!

Mathematical models of running cockroaches and scale-invariance in cells

Featuring summaries of the talks:

Featuring summaries of the talks:

- Phil Holmes: “The neuro-mechanics of running cockroaches: How much natural detail do we need?“, and

- Eduardo Sontag: “Some invariant aspects of dynamical behavior of cell signaling pathways“, or “Transient ‘phenotypes’ for biomolecular model discrimination“

In this post, I introduced the basic distinction between models as abstractions, heuristics, and insilications. Holmes and Sontag’s work is a great example of insilications. Much like we are used to in physics, their applied math work is detailed enough to provide quantitative predictions that are directly testable against the relevant biology. Both speakers stressed the importance of symmetries and invariants for studying dynamic systems, and Sontag’s work provided a useful theorem for detecting scale-invariance of response curves for the giant systems of ODEs used to model cells. After 15 years of experience modeling how cockroaches run, Holmes stressed an important general note for all mathematical modelers:

[We] still don’t understand non-linear dynamics beyond linearizations or general symmetries … [and] it is not clear which details matters a priori.

Microscopic computing in cells and with self-assembling DNA tiles

Featuring summaries of the talks:

Featuring summaries of the talks:

- Luca Cardelli: “The cell cycle switch computes approximate majority“,

- Jack Lutz: “Parametrizing self-assembly“,

- Rebecca Schulman: “Universal molecular algorithms for learning and pattern formation“

This was the only series of talks that was not focused exclusively on using cstheory to understand natural sciences, but also on implementing computing or assembling technologies using insights gleaned from biology. Caredlli simulated and analyzed cellular computational circuits that — for computer scientists — define biology to show that they accurately implement the approximate majority algorithm from distributed computing. Lutz and Schulman looked even deeper inside the cell, to see how they can harness the self-assembly of DNA tiles to build simple nanoscale biological replicators. Lutz concentrated on the theory of faithful simulation of Turing Machines by DNA tiling systems. Schulman looked at how to experimentally realize these simple self-replicating systems. I was surprised to learn that DNA tile self-assembly can be run in the wet-lab!

Machine learning and prediction without understanding

Featuring a summary of the talk:

Featuring a summary of the talk:

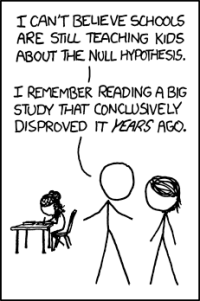

- Ishanu Chattopadhyay: “Data smashing: Finding causal similarity in natural data sets“

This was the only purely machine learning talk of the workshop. Since the goal of many talks at the meeting was to look at how to build theory and understanding in biology, I used this post as an opportunity to turn to some philosophy of science. Is big data good for us? Or will it stifle progress in the long term? For theoria, I asked if the proper versus improper learning distinction in computational learning theory can help frame the philosophical discussion over the merit of understanding versus prediction. For practica, I sketched the ideas behind Chattonpadhyay’s approach to anomaly detection directly with streams without explicitly learning the underlying probabilistic finite state automata.

This was the most popular post of the series on reddit, twitter, and Google Plus. It became TheEGG’s most popular post with 3890 views to date. At the time of this summary, all seven previous posts together garnered around 7.8 thousand views and hopefully helped popularize the algorithmic lens among readers!

Partial Bibliography

Barish, R. D., Schulman, R., Rothemund, P. W., & Winfree, E. (2009). An information-bearing seed for nucleating algorithmic self-assembly. Proceedings of the National Academy of Sciences, 106(15), 6054-6059.

Cardelli L, & Csikász-Nagy A (2012). The cell cycle switch computes approximate majority. Scientific Reports, 2

Chattopadhyay, I., Wen, Y., & Ray, A. (2010). Pattern Classification In Symbolic Streams via Semantic Annihilation of Information. American Control Conference arXiv: 1008.3667v1

Chazelle, B. (2012). Natural algorithms and influence systems. Communications of the ACM, 55 (12).

Doty, D., Lutz, J. H., Patitz, M. J., Schweller, R. T., Summers, S. M., & Woods, D. (2012). The tile assembly model is intrinsically universal. In Foundations of Computer Science (FOCS), 2012 IEEE 53rd Annual Symposium on (pp. 302-310). IEEE.

Feinerman, O., & Korman, A. (2012). Memory Lower Bounds for Randomized Collaborative Search and Implications to Biology. 26th International Symposium on Distributed Computing (DISC)

Pais, D., & Leonard, N. (2013). Adaptive network dynamics and evolution of leadership in collective migration Physica D: Nonlinear Phenomena DOI: 10.1016/j.physd.2013.04.014

Schulman, R., & Winfree, E. (2005). Self-replication and evolution of DNA crystals. In Advances in Artificial Life (pp. 734-743). Springer Berlin Heidelberg.

Shoval O, Goentoro L, Hart Y, Mayo A, Sontag E, & Alon U (2010). Fold-change detection and scalar symmetry of sensory input fields. Proceedings of the National Academy of Sciences of the United States of America, 107(36): 15995-6000.

Valiant, L.G. (2009) Evolvability. Journal of the ACM 56(1): 3.

Pingback: Workshop: Natural Algorithms and the Sciences — May 20-21, 2013

Great series of posts, thank you!

Thanks for reading! I am glad you enjoyed them. If you have any questions about the posts or want me to expand on some of the topics touched on then let me know. Always happy to have return readers and ideas for new posts.

I must say, your blog has been one of the tilting forces upon influencing my decision to return to graduate school and pursue research on my passion, the intersection of theoretical computer science and the natural sciences. Whether you should be congratulated or admonished for that remains to be seen :).

I’m going to start a new blog of my own very soon writing about such topics, hopefully I can be as interesting as you have been!

That means a lot to me, I am glad I could be helpful. I think it is important to view grad school as an intellectual journey and not a means to an end.

Send me a link to your blog when you start it up, I don’t have a blogroll here but I do like to read and comment on other blogs. If you need any advice on starting it up, or if you want to contribute some posts under your real name on this blog then let me know. I like the discussion you engaged in on the physical constants post.

Pingback: Monoids, weighted automata and algorithmic philosophy of science | Theory, Evolution, and Games Group

Pingback: Stats 101: an update on readership | Theory, Evolution, and Games Group

I’ve been reading Chaitin’s book ‘Proving Darwin’, which I picked up based on your reviews and critiques. I’m inclined to agree with some of what you said, and at the same time, I think his basic approach makes a lot of sense, and hopefully mathematicians will expand on the ideas.

My own area is physical chemistry, and what I think would be interesting would be to do something akin to statistical mechanics of ensembles of models. I do think that the questions you raise about the lack of competition and the directedness of evolution that seems to be inherent in Chaitin’s approach may well need addressing, though as a first pass, I am still pretty impressed with what he is doing.

Anyhow, while I am practicing science in a totally different context, I have come to really enjoy this blog and the kinds of things it addresses. It makes me want to think harder about this stuff, and to see how I can use things like Mathematica to illuminate it.

– Dave E

Thank you very much for this comment and encouraging words! I am always happy to learn that people are following this blog :D.

If you like the basic approach of Chaitin’s book then I recommend taking a look at Valiant’s Probably Approximately Correct: Nature’s algorithms for learning and prospering in a complex world. It is a relatively new book, and I enjoyed it much more than Chaitin, although some critiques carry over (and I mention some of them in this post as a response to his talk). In terms of computer science and mathematics it is a much more interesting (although a little more involved, but still accessible to non-computer scientists, I think) read. I haven’t had a chance to review it, yet, but will probably write about it in the coming weeks because I will be preparing a related paper for submission.

I don’t completely understand your comment about “statistical ensembles of models”, but Valiant’s work will come closer to that since it is based on machine learning and thus closer to stat mech.

If you want to discuss Chaitin’s book in more detail as you read through it then I would be very happy if you started a discussion on the original review or technical follow-up. You can also always reach me by G+ or email.

Pingback: Computational complexity of evolutionary equilibria | Theory, Evolution, and Games Group

Pingback: What can theoretical computer science offer biology? | Theory, Evolution, and Games Group

Pingback: What is the algorithmic lens? | Theory, Evolution, and Games Group

hi ak. “Although bionformatics makes heavy use of algorithms and machine learning, and its practitioners are often mildly familiar with computational complexity (enough to know that almost everything they study is NP-complete), it doesn’t really apply computational thinking to understand or building theories in biology.”

it seems to me the hardcore/pro/purist CS theorists tend to twist themselves in knots excluding various areas of CS that seem quite theoretical & your blog goes in that direction. what exactly does “theoretical” mean? I would say quite to the contrary, and to me its obvious, that in bioinformatics and many other fields, practitioners often make heavy use of algorithms and machine learning to understand and build theories!…. there is some confusion on the nature of datamining. “Instead, it often generates or analyzes petabytes of blind data that biologists subsequently use to generate or test traditional verbal or physics-inspired hypotheses.” how is a “verbal or physics inspired hypothesis” not a theory?

also some people seem to be trying to discriminate more classic statistics based approaches from more newer machine-learning based approaches, and again I say theres a point where the black and white blurs into indistinguishable shades of gray, and that its almost like listening to grown scientists talk about the power of Batman versus Superman….. so, I have seen these debates, understand some of the motivation, but at heart they start to make no sense to me and seem only to be about people with both similar/different backgrounds attempting to rule out remarkably common/unifying ground…. & feel its scientists attempting to come to grips with a new kuhnian paradigm shift and minimizing/downplaying that what is genuinely new wine and new bottles is actually old wine in new bottles or new wine in old bottles!

intend to blog on this in the near future on the topic of datamining etc. … I think both some confusion/insight comes from a breathless essay written by Anderson for wired magazine on the subject. however here is a blog I wrote not long ago mainly about datamining the presidential election

Pingback: Cataloging a year of blogging: the algorithmic world | Theory, Evolution, and Games Group

Pingback: Evolution is a special kind of (machine) learning | Theory, Evolution, and Games Group

Pingback: Computational theories of evolution | Theory, Evolution, and Games Group

Pingback: Why academics should blog and an update on readership | Theory, Evolution, and Games Group

Pingback: Algorithmic Darwinism | Theory, Evolution, and Games Group

Pingback: Cataloging a year of blogging: cancer and biology | Theory, Evolution, and Games Group

Pingback: Counting cancer cells with computer vision for time-lapse microscopy | Theory, Evolution, and Games Group

Pingback: From perpetual motion machines to the Entscheidungsproblem | Theory, Evolution, and Games Group

Pingback: Four stages in the relationship of computer science to other fields | Theory, Evolution, and Games Group

Pingback: The science and engineering of biological computation: from process to software to DNA-based neural networks | Theory, Evolution, and Games Group